All Eyes on Rafah.

Edition 33: Message of hope is the Internet's most viral AI image. Two different artists are claiming credit.

June 4, 2024

Introduction - All Eyes on Rafah

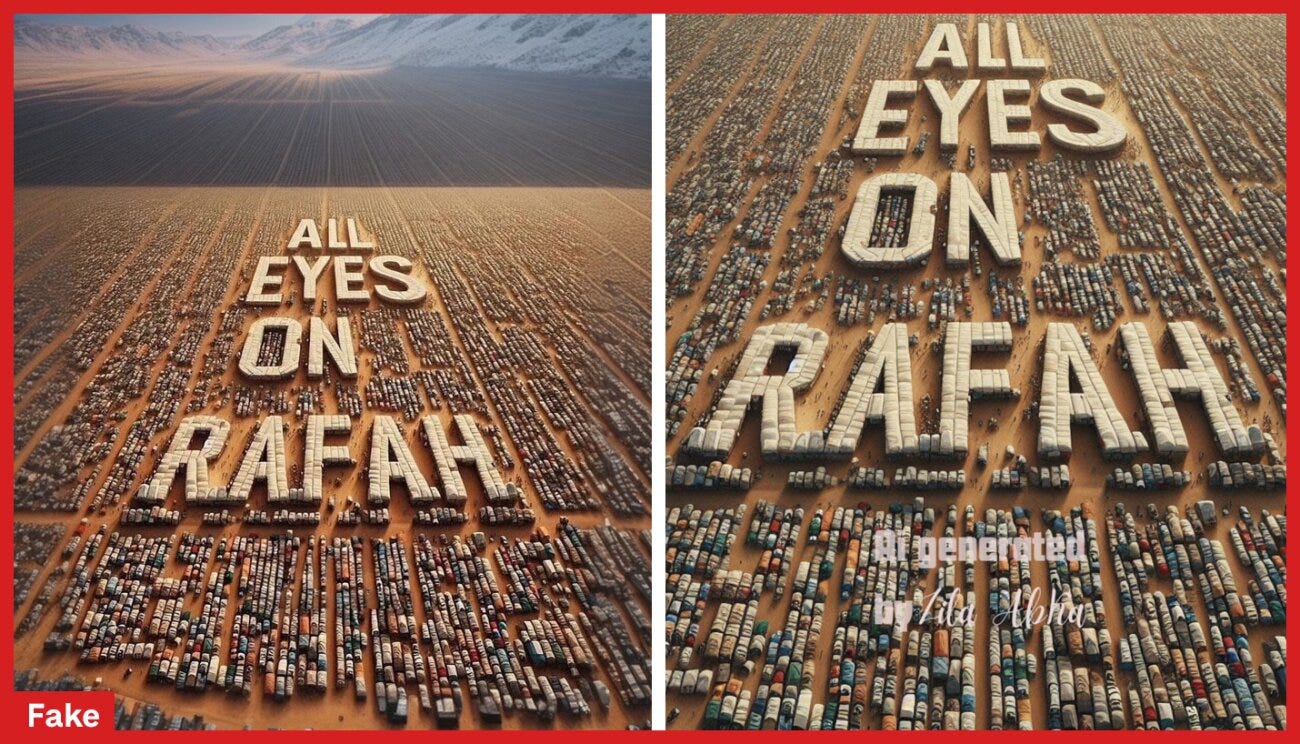

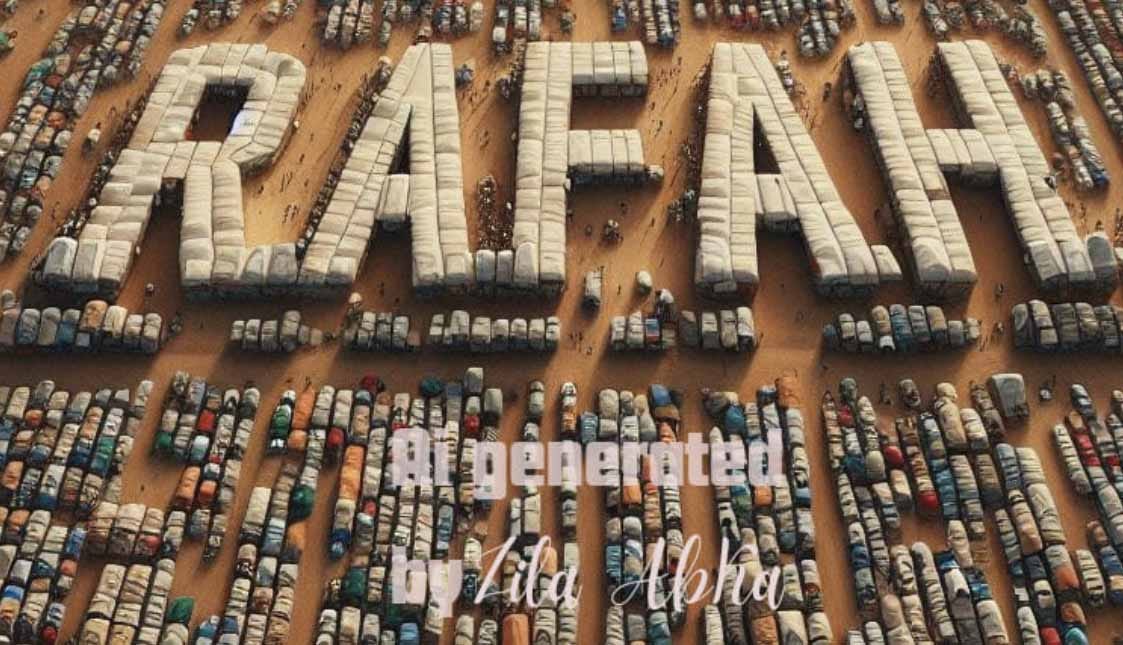

In recent weeks, a striking AI-generated image depicting the Palestinian city of Rafah has swept the internet, shared millions of times across social media platforms. The image, featuring rows of tents with the text "All Eyes on Rafah" spelled out, has become a rallying cry drawing attention to the humanitarian situation in Gaza.

However, while this AI story pales in comparison to a day in the life there, the story behind this viral image is a complex one, with two different Malaysian artists both claiming credit for its creation.

Ready Player One

The origins of the image below can be traced back to February 2024, when 39-year-old science teacher and AI art hobbyist Zila AbKa was experimenting with Microsoft's Image Creator. AbKa, who is also a pro-Palestinian activist, generated an image depicting the tent encampments in Rafah, adding the text "All Eyes on Rafah" - a phrase that had gained prominence after a World Health Organization representative used it to draw attention to the displacement crisis in the region.

AbKa shared the original AI-generated image, complete with watermarks indicating it was AI-created and crediting her as the artist, on the Facebook group "Prompters Malaya" - a community of over 250,000 mostly Malaysian AI enthusiasts. However, the image did not gain significant traction at the time.

Fast forward to last week, when AbKa noticed a very similar image going viral on Instagram, but with one crucial difference - the watermarks identifying her as the creator had been removed. The viral version also featured an expanded background with snow-capped mountains, suggesting it had been further edited and manipulated.

This edited version was then amplified by high-profile influencers and celebrities, including Dua Lipa and Bella Hadid, leading to it being shared nearly 50 million times on Instagram alone.

Ready Player Two

The person behind the account 'Shahv4012.'

A 21-year-old Malaysian college student named Amirul Shah, who goes by the Instagram handle @Shahv4012, claims he is “pretty sure” he created this viral version using Microsoft's AI tools. Shah says he was unaware of AbKa's earlier version and was simply trying to use AI to raise awareness about Gaza.

Per NPR:

When Shah was reached for an interview, he denied copying Abka’s creation. Instead, he shared a different version of events.

Shah, a 21-year-old college student in Kuala Lumpur, Malaysia’s capital, has not previously spoken out about his process.

A photography enthusiast, Shah says he was toying around with an AI image generator recently. He thinks he used Microsoft’s Image Creator, the same service Abka used, but he claims he can’t remember.

When he added it to an Instagram "template," it ricocheted around the world, as influencers and celebrities like Dua Lipa and Bella Hadid amplified it to their millions of followers.

Intellectual Property Protections & Transparency

The competing claims highlight the complexities of authorship and ownership in an era where AI-generated content is becoming prevalent and widely shared online. The U.S. Copyright Office has declined to register images generated entirely by AI, on the grounds that they lack human authorship.

Moreover, the removal of watermarks and lack of transparency around the image's origins illustrate ethical concerns about proper crediting and potential misinformation risks with viral AI content. As this incident shows, an AI-generated image can be easily edited, re-shared, and its provenance obscured, allowing it to take on new meanings and spread rapidly online with little accountability.

The viral spread of the "All Eyes on Rafah" image demonstrates both the power of AI-generated visuals to raise awareness around important issues and the unresolved challenges surrounding their creation, distribution and rights management in the digital age. NPR also tried to re-create the image, to see whether a more-or-less identical copy could be generated independently, as Amirul Shah claims to have done.

This Guy is Being Less Than Straightforward

From NPR:

Technologists say that generating the same exact AI image twice is exceedingly unlikely.

In dozens of attempts to recreate the image using Microsoft’s Image Creator, NPR was not able to prompt the tool to create a visual that came close to the viral one. Most of the time, the tool struggled to correctly spell “All eyes on Rafah,” a limitation of many AI image generators, which tend to depict words misspelled or warped in some way.

These are the results produced for NPR by Microsoft’s Image Creator after given a prompt to produce a realistic-looking aerial photo of Rafah, with the phrase “all eyes on Rafah” superimposed among the tents.

Summary

Allegedly, AI artwork travels farther and wider than traditional photography, according to our new friend Amirul Shah (the Fake Maker). We also learned that the identical render he claims to have produced is exceedingly unlikely. So he almost certainly borrowed/stole, which is what AI is more-or-less about. But he needs to tell the whole truth so another artist can collect the praise (and the clicks he’s received as a result).

We have been asked if we are available to consult with agencies and brands. Yes, we are. Please send inquiries to theadstack@adverstuff.com.

AI/Content Production - CMS Products & Workflow - Internal or External Agencies - Production Requirements - Exploratory & Optimization Projects - Pitches - and more

Brands/Agencies

What can/should I be doing now to protect my brand visual assets?

Present options are limited. A combination of tools needs to be implemented to protect brand assets. We will cover this soon.

The Future is “PhotoGuard” or Similar Integrated Into Your CMS Workflow.

Researchers at MIT have developed a tool called “PhotoGuard” that aims to protect images from unauthorized AI alterations. Unfortunately, it is not commercially available. PhotoGuard disrupts the data within an image that AI models use to understand what they’re looking at. It puts an invisible “immunization” over images, preventing AI models from manipulating them.

This technique is particularly relevant in an era where advanced generative models can create hyper-realistic images with ease. By altering photos in tiny ways that are invisible to the human eye, PhotoGuard ensures authenticity and guards against misuse. Ad agencies and brands could explore using PhotoGuard to safeguard their digital assets from unauthorized alterations.
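For readers who like to see an idea in code, here is a minimal sketch of the "tiny, invisible changes" described above. It is not PhotoGuard's code. The four-pixel "image", the toy linear `encode` function, the `immunize` helper, and the `eps` and `step` values are all assumptions invented for illustration. The sketch nudges each pixel by at most `eps` brightness levels (out of 255) so that the toy encoder's reading of the image drifts toward a meaningless target.

```python
# Toy illustration only: a stand-in linear "encoder" replaces the real AI model.
# All names and numbers here are hypothetical, not taken from PhotoGuard.

PIXELS = [100.0, 150.0, 200.0, 50.0]      # a pretend 4-pixel grayscale image
WEIGHTS = [[0.5, -0.25, 0.0, 0.25],       # pretend encoder weights (2 outputs)
           [0.0, 0.5, 0.5, -0.25]]
TARGET = [0.0, 0.0]                       # the "meaningless" encoding to aim for


def encode(pixels, weights):
    """Toy encoder: each output is a weighted sum of the pixels."""
    return [sum(w * p for w, p in zip(row, pixels)) for row in weights]


def loss(pixels, weights, target):
    """Half the squared distance between the encoding and the target."""
    emb = encode(pixels, weights)
    return 0.5 * sum((e - t) ** 2 for e, t in zip(emb, target))


def immunize(pixels, weights, target, eps=2.0, step=0.5, iters=20):
    """Projected sign-gradient descent under a per-pixel budget of +/- eps."""
    adv = list(pixels)
    for _ in range(iters):
        emb = encode(adv, weights)
        diff = [e - t for e, t in zip(emb, target)]
        # Gradient of the loss with respect to each pixel (W^T * diff).
        grad = [sum(weights[k][i] * diff[k] for k in range(len(weights)))
                for i in range(len(adv))]
        for i, g in enumerate(grad):
            sign = (g > 0) - (g < 0)
            moved = adv[i] - step * sign
            # Keep the change within eps of the original pixel value.
            moved = max(pixels[i] - eps, min(pixels[i] + eps, moved))
            # Keep the result a valid 0-255 brightness value.
            adv[i] = max(0.0, min(255.0, moved))
    return adv


if __name__ == "__main__":
    protected = immunize(PIXELS, WEIGHTS, TARGET)
    print("original :", PIXELS, "loss", loss(PIXELS, WEIGHTS, TARGET))
    print("protected:", protected, "loss", loss(protected, WEIGHTS, TARGET))
```

In this toy setup no pixel moves more than 2 levels, yet the encoder's output is pulled toward the target. The real tool works against large image-generation models, so treat this purely as an intuition aid.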

Consider “Glaze” as Above.

Another tool that works in a similar way is called Glaze. But rather than protecting people’s photos, it helps artists prevent their copyrighted works and artistic styles from being scraped into training data sets for AI models.

Glaze, which was developed by a team of researchers at the University of Chicago, helps protect intellectual property. Glaze “cloaks” images, applying subtle changes that are barely noticeable to humans but prevent AI models from learning the features that define a particular artist’s style.

Glaze corrupts AI models’ image generation processes, preventing them from spitting out an infinite number of images that look like work by particular artists.

PhotoGuard has a YouTube demo online that works with Stable Diffusion (open source). You’ll need your GitHub chops. Artists will soon have access to Glaze. The team is currently beta testing the system and will allow a limited number of artists to sign up to use it later this week.

A Larger Solution is Required.

Mr. Zuckerberg and Colleagues Need to Lean In on This Sucker.

The most effective way to prevent our images from being manipulated by bad actors would be for social media platforms and AI companies to provide ways for people to immunize their images that work with every updated AI model.

In a voluntary pledge to the White House, leading AI companies have pinky-promised to “develop” ways to detect AI-generated content. However, they did not promise to adopt them. If they are serious about protecting users from the harms of generative AI, that is perhaps the most crucial first step.

Deeper Learning

Cryptography may offer a solution to the massive AI-labeling problem. That is another conversation.

Edition 33 sources:

NPR

June 3, 2024, 12:15 PM ET - “‘All eyes on Rafah’ is the Internet's most viral AI image. Two artists are claiming credit” by Bobby Allyn

404 Media

May 31, 2024, 11:15 AM - “Viral ‘All Eyes on Rafah’ Image Came From Facebook Group to Make ‘AI Industry Prosper’” by Jason Koebler

BBC

June 1, 2024 - “All Eyes on Rafah: The post that's been shared by more than 47m people” by Alys Davies & BBC Arabic, BBC News

If there is no copyright, and they are created from stolen works (because let's be honest, they are) what's to protect? How about we stop endorsing the use of tools that are trained on scraped content - including our own personal photos around the internet, and the ads our industry makes? That seems like the sustainable solution.

Matt - Thanks for reading. I kept pics of my kids off the web for reasons like these. Had family pull down pics of them as well. Nothing much you can do about "borrowed" works short of public shaming in the town square. Firefly trains on licensed Adobe Stock images, plus openly licensed and public-domain content. Probably the way to go.