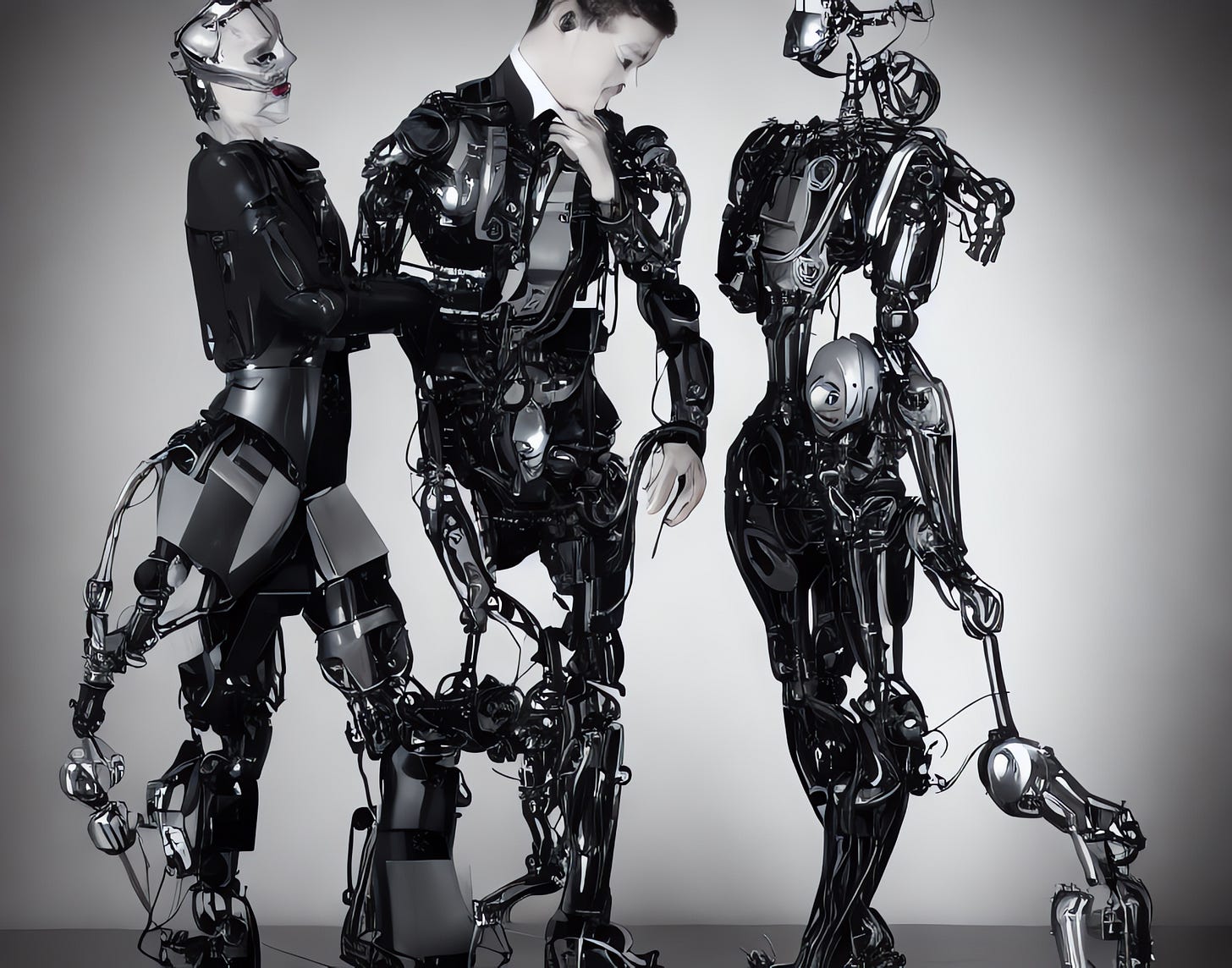

Tomorrow People - Midjourney Experiment 1

Edition 2 - AI Producering

Summary: What happens when you enter the same prompts in Midjourney and Stable Diffusion? They are similar products. Or are they? My project name was originally “Robot People.” I was thinking of cyborgs, but for some reason I never used that word in the prompts.

Background: Stable Diffusion and Midjourney are, along with DALL-E 2, among the better-known AI image generators. You enter text descriptions called “prompts” to describe and control camera angle, lighting, physical objects, weather, and themes that affect everything in the image.

The prompts: Style of FuturoFrankenstein, realistic photograph, intimate robotic husband and wife.

** The style of FuturoFrankenstein is Midjourney-specific, but I was also curious how Stable Diffusion would interpret it. I’ll do more of these in the future.
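For anyone who wants to repeat the experiment, here is a minimal sketch of the setup: one prompt, built from comma-separated descriptors, sent unchanged to both tools. The `build_prompt` helper and the `jobs` dictionary are hypothetical names for illustration only. Neither Midjourney nor Stable Diffusion provides them, and nothing here calls either tool.

```python
# Hypothetical helper for illustration; it is not part of Midjourney or Stable Diffusion.
def build_prompt(*parts: str) -> str:
    """Join the non-empty descriptors into one comma-separated prompt string."""
    return ", ".join(p.strip() for p in parts if p.strip())


# The three descriptors used in this experiment.
prompt = build_prompt(
    "Style of FuturoFrankenstein",
    "realistic photograph",
    "intimate robotic husband and wife",
)

# The same text goes to both generators, so any difference in the results
# comes from the tool and not from the wording.
jobs = {tool: prompt for tool in ("Midjourney", "Stable Diffusion")}

print(prompt)
```

Keeping the prompt in one place makes it easy to change a single descriptor (say, adding "cyborg") and rerun the comparison on both sides.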

Stable Diffusion

These feel like Andy Warhol robot art. They're weird and odd in every way. I dig it. BTW - his catalog of drawings/comic books was just published as a collection, so I've got Warhol on the brain. Check out Taschen for details on the books.

What matters in these examples is that I used both Stable Diffusion and Midjourney to produce the images, with the same prompts, so I could compare and contrast. While I was going for more of a cyborg look, for some reason I never used "cyborg" in a prompt.

The Stable Diffusion renders feel raw. Unfinished. Parts are missing. Lacking balance and symmetry. These are some of the best things about it. It pushes your imagination to imagine the backstory.

Gen-2

MOTION TEST (below)

In this example, using Runway Gen-2 (a third app), the single frame provided is transformed in two major ways. First, motion is added to each of the four models featured in the still image. Second, a surprise: an entirely new scene with two "more finished" subjects is introduced.

Midjourney

The Midjourney examples below are all in color and detailed. More photoreal, with a finished presentation. More purpose in the design.

Gen-2

MOTION TEST (below)

In this example, using Runway Gen-2, our subjects move around a bit. Not spectacular, but it’s still rather mind-blowing in concept that foreground and background can be differentiated in a flat, rasterized .png image.